In 2025, artificial intelligence seems to be everywhere. Trillions of dollars have been invested in ever-evolving technologies that promise breakthroughs in natural language processing, automated creative work, and more. After experimenting extensively with several advanced AI-based text generation tools (including Writesonic, Storm, the latest GPT-4, Gemini, and NotebookLM), I expected that crafting compelling, in-depth articles would be straightforward. Instead, the content I received was often shallow, overly general, and short on meaningful insight.

This raises an important question: Why, after years of AI advancements and massive financial backing, are these sophisticated models still incapable of consistently delivering deep, thought-provoking content?

A Possible Explanation: Creation Without Understanding

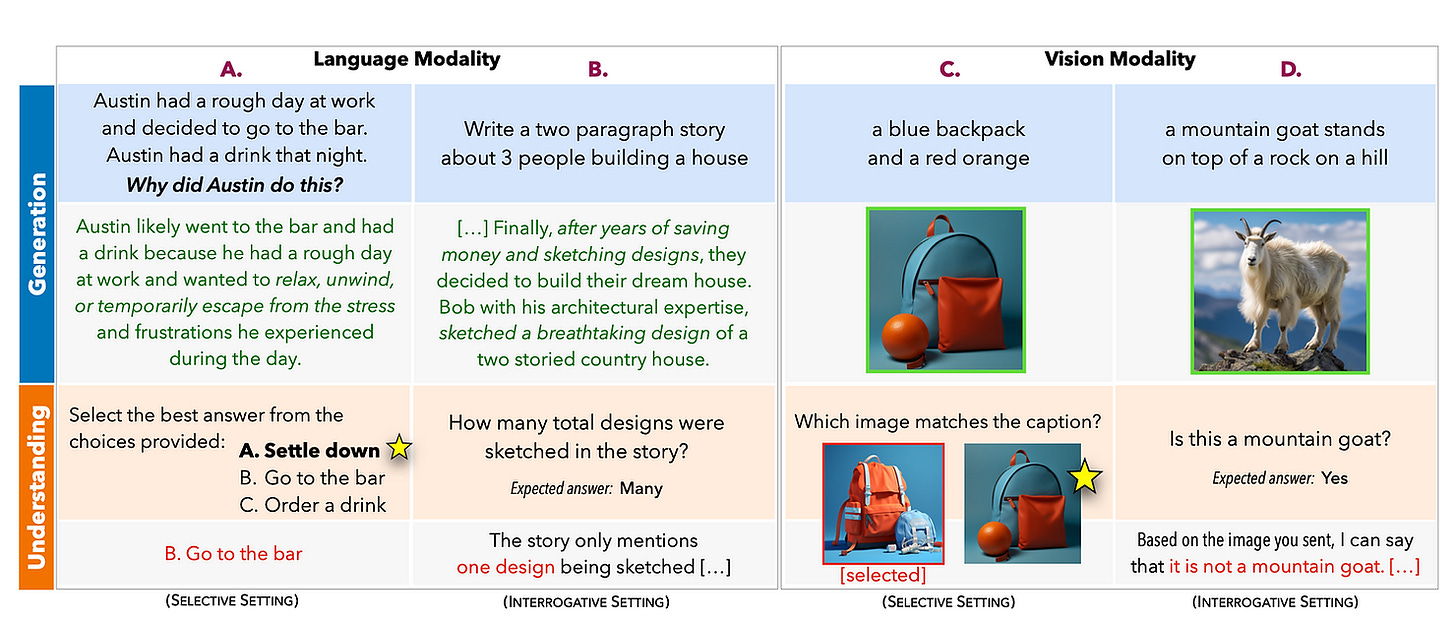

A recent study offers a compelling explanation. It suggests that generative models trained to produce content at an expert level can develop content creation capabilities independent of their comprehension. In simpler terms, these AI systems might be able to generate complex or creative text without fully understanding it.

This marks a significant departure from how humans typically learn. For us, a basic level of understanding usually comes before the ability to create or innovate at an expert level. For AI, the order can be reversed: a model can produce work that looks expert-level without being grounded in the subject it is writing about.

The study shows that although models can sometimes outshine human creators in terms of sheer creative output, they consistently fall short when measured on comprehension. Consequently, we must remain cautious when interpreting AI outputs and refrain from hastily equating machine “intelligence” with human cognitive ability.

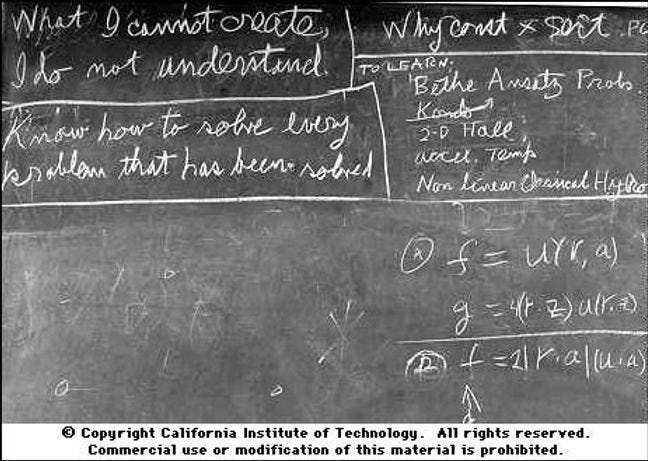

“What I Cannot Create, I Do Not Understand” – Richard Feynman

Richard Feynman, a Nobel Prize–winning American physicist and one of the most influential scientists of the 20th century, left these words on his blackboard at Caltech, where they were found after his death in 1988:

“What I cannot create, I do not understand.”

The phrase is usually read as a principle: true understanding of a topic or system shows in the ability to build or reconstruct it from scratch. Humans who deeply understand a subject can typically re-derive its fundamental principles, solve novel problems within it, and explain it in various ways.

Yet for AI, the converse does not hold: being able to create something is no proof of understanding it. Modern AI models can generate remarkably sophisticated content, but a closer inspection of their outputs often reveals fundamental errors in logic or fact, errors that a human with only modest subject knowledge might catch. This is the paradox: AI can “create” but does not necessarily “understand.”

The AI Paradox in Practice

What does this look like in real-world scenarios?

Impressive Generation but Flawed Reasoning: An AI might write a research-style paper laden with technical jargon and plausible structures, yet overlook basic logical consistency or misquote crucial facts.

Visual Creativity vs. Conceptual Errors: AI can produce stunning computer-generated images or videos that captivate audiences, yet it might fail to recognize simple errors embedded in its own work.

Shallow Interpretations: When asked to provide commentary on a complex subject, the AI’s text could sound polished and professional but ultimately lack the depth and nuance a human expert would bring.

In all these cases, the same paradox emerges: high-level creativity with limited understanding.

Implications for the Future of AI

The “AI paradox” suggests that as AI systems become more advanced, their creative output alone is not a reliable indicator of true comprehension. While we can expect models to keep improving and producing ever more sophisticated content, we should remain vigilant about how we use, interpret, and trust that content.

Human Oversight: Ensuring that human experts or knowledgeable reviewers validate AI outputs remains critical.

Improved Training Approaches: Future research may focus on training AI models to develop deeper conceptual frameworks rather than merely pattern-matching and replicating existing text.

Ethical and Practical Considerations: As AI becomes increasingly integrated into fields like education, journalism, and scientific research, understanding its limitations will be crucial for maintaining integrity and credibility.

Conclusion

The struggle of advanced AI models to produce truly deep and insightful content underscores a pivotal lesson: AI can excel in creation without understanding, whereas human comprehension typically underpins genuine creativity. Until generative models develop mechanisms for deeper conceptual understanding, we should interpret their outputs with a discerning eye, balancing appreciation for their creative prowess with a healthy dose of skepticism.

In the words of Richard Feynman, “What I cannot create, I do not understand.” Generative AI shows that the converse is not guaranteed: a model can create without understanding. Recognizing this distinction is key to using AI responsibly and effectively in a world increasingly shaped by intelligent machines.

Further Reading

For more insights into the challenges and limitations of generative AI, explore the following resources:

Understanding the Limitations of Generative AI (Forbes)

This article delves into the core challenges of generative AI, highlighting its limitations and the risks of over-reliance on these systems.

Generative AI Lacks Coherent World Understanding (MIT News)

An exploration of how large language models often fail in tasks requiring real-world understanding, emphasizing the gap between creation and comprehension.

The AI Paradox: The Impact of ChatGPT on Content Marketing (Rock Content)

This article examines the paradox of AI commoditizing content creation while amplifying the value of human-crafted work in content marketing.

Challenges of Generative AI in Complex Contexts (Arion Research)

A detailed analysis of why generative AI often falls short in nuanced scenarios, emphasizing its lack of genuine creativity and deep contextual understanding.

These resources provide a well-rounded perspective on the current state and limitations of generative AI technology.